DhiLayer: AI Testing & QA

Evaluate AI models, features, and outputs before you deploy them in your business. Ensure reliability, security, and fairness with Dhinker’s DhiLayer.

Overview

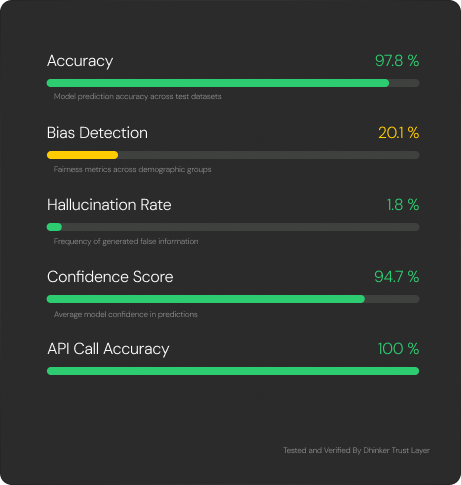

The DhiLayer by Dhinker is our QA and benchmarking service for AI systems. We simulate edge cases, identify hallucinations, and validate AI performance using our custom testing frameworks.

What You Get

Accuracy Testing

Comprehensive evaluation of model accuracy, precision, and recall across typical and edge-case scenarios.

Bias Detection

Identify and measure bias in AI models to ensure fair and ethical outputs.

Performance Reports

Detailed benchmarking reports with actionable insights for improvement.

Security Review

Comprehensive security and data compliance review for your AI systems.
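To illustrate what measuring bias can involve, the sketch below computes one widely used fairness metric, the demographic parity difference. This metric is the largest gap in positive-prediction rate between groups. It is an illustrative assumption, not a description of DhiLayer's own frameworks. The function name and sample data are hypothetical.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Hypothetical helper: largest gap in positive-prediction rate between groups.

    predictions: list of 0/1 model outputs.
    groups: list of group labels, aligned index-by-index with predictions.
    Returns (gap, per_group_rates).
    """
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    if not predictions:
        raise ValueError("no predictions supplied")

    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred

    # Positive-prediction rate per group, e.g. approval rate per demographic.
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical sample: group "a" receives positive outcomes 3/4 of the time, group "b" 1/4.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(preds, groups)
print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```

A gap of 0 means every group receives positive predictions at the same rate. Larger values flag a disparity worth investigating. Demographic parity is only one of several fairness criteria, and which criterion fits depends on the use case.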

Testing Process

Assessment

Initial evaluation of your AI system and identification of testing requirements.

Testing

Testing with our custom frameworks, including edge-case simulation.

Analysis

In-depth analysis of the results to pinpoint issues and opportunities for improvement.

Report

Detailed report with findings, recommendations, and actionable next steps.

Performance Metrics

Precision

Share of positive predictions that are correct

Recall

Share of actual positive instances the model finds

Performance

Overall system performance score
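Precision and recall have standard definitions for binary classification:

* precision = TP / (TP + FP)
* recall = TP / (TP + FN)

Here TP, FP, and FN count true positives, false positives, and false negatives. The minimal sketch below computes both from labeled predictions. The function name and sample labels are hypothetical. Returning 0.0 when a denominator is zero is an assumed convention. The "Performance" score above is not defined here, so it is not sketched.

```python
def precision_recall(y_true, y_pred):
    """Hypothetical helper: precision and recall for binary labels (1 = positive)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # negatives wrongly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # positives the model missed

    # Assumed convention: report 0.0 when there is nothing to divide by.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical labels: 4 real positives; the model flags 3 items, 2 of them correctly.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
p, r = precision_recall(y_true, y_pred)
print(f"precision={p:.3f} recall={r:.3f}")  # precision=0.667 recall=0.500
```

The two metrics pull in different directions. Flagging more items tends to raise recall but can lower precision, which is why they are usually reported together.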

Ideal For

AI Developers

Product Teams

Startups

Ready to Validate Your AI?

Get comprehensive testing and validation to ensure your AI systems are reliable, secure, and ready for production.